¹Beijing Institute of Technology, ²University of Birmingham, ³Beijing Jiaotong University

in CVPR 2025

Effective Class Incremental Segmentation (CIS) requires simultaneously mitigating catastrophic forgetting and ensuring sufficient plasticity to integrate new classes. This inherent conflict often leads to a back-and-forth between the two goals, which turns the objective into finding a balance between the performance of previous (old) and incremental (new) classes. To address this conflict, we introduce a novel approach, Conflict Mitigation via Branched Optimization (CoMBO). Within this approach, we present the Query Conflict Reduction module, designed to explicitly refine queries for new classes through lightweight, class-specific adapters. This module provides an additional branch for the acquisition of new classes while preserving the original queries for distillation. Moreover, we develop two strategies that follow the branched structure to further mitigate the conflict, i.e., the Half-Learning Half-Distillation (HDHL) over classification probabilities, and the Importance-Based Knowledge Distillation (IKD) over query features. HDHL selectively engages in learning for classification probabilities of queries that match the ground truth of new classes, while aligning unmatched ones to the corresponding old probabilities, thus ensuring retention of old knowledge while absorbing new classes via learning negative samples. Meanwhile, IKD assesses the importance of queries based on their matching degree to old classes, prioritizing the distillation of important features and allowing less critical features to evolve. Extensive experiments in Class Incremental Panoptic and Semantic Segmentation settings demonstrate the superior performance of CoMBO.

We propose a Half-Learning Half-Distillation mechanism that applies soft distillation for old classes and binary-based optimization for new classes to effectively balance retention and acquisition.
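To make the split between the two halves concrete, here is a minimal pure-Python sketch of the idea described above. It is not the authors' implementation: the function names (`hdhl_loss`, `bce`, `kl_div`), the use of per-class sigmoid binary cross-entropy for matched queries, and the use of a KL divergence restricted to the old-class logits for unmatched queries are all assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def bce(prob, target, eps=1e-7):
    # Binary cross-entropy for a single probability/target pair.
    p = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def kl_div(p_old, p_new, eps=1e-7):
    # KL(p_old || p_new): penalizes the new model for drifting from the old one.
    return sum(po * math.log((po + eps) / (pn + eps))
               for po, pn in zip(p_old, p_new))

def hdhl_loss(new_logits, old_probs, matched_class):
    """Hypothetical half-learning / half-distillation split.

    new_logits:    per-query logits over all classes (old classes first).
    old_probs:     per-query probabilities from the frozen old model (old classes only).
    matched_class: per-query index of the matched new-class ground truth, or None.
    Returns (learning_term, distillation_term).
    """
    learn, distill = 0.0, 0.0
    for logits, p_old, cls in zip(new_logits, old_probs, matched_class):
        if cls is not None:
            # Learning half (assumed form): binary targets, one positive class.
            for c, z in enumerate(logits):
                learn += bce(sigmoid(z), 1.0 if c == cls else 0.0)
        else:
            # Distillation half (assumed form): align old-class outputs with the old model.
            p_new = softmax(logits[:len(p_old)])
            distill += kl_div(p_old, p_new)
    return learn, distill

# Two queries: the first is matched to new class 2, the second is unmatched.
example_logits = [[-4.0, -4.0, 4.0], [math.log(0.7), math.log(0.3), -3.0]]
example_old = [[0.5, 0.5], [0.7, 0.3]]
print(hdhl_loss(example_logits, example_old, [2, None]))
```

In this sketch, a query only ever contributes to one of the two terms, which is the property the mechanism relies on: matched queries are free to learn the new class, while unmatched queries are held close to the old model's predictions.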

An extra Query Conflict Reduction module is proposed to refine queries for new classes while preserving unrefined features, which enables Importance-based Knowledge Distillation for remembering the key features.
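The importance weighting can be illustrated with a small pure-Python sketch. This is an assumption-laden illustration rather than the paper's formulation: it takes the "matching degree" of a query to be its highest old-class probability under the old model, normalizes these scores into weights, and applies them to a mean-squared error between the old and the unrefined new query features. The names `importance_weights` and `ikd_loss` are hypothetical.

```python
def importance_weights(old_probs):
    # Assumed importance score: the highest old-class probability of each query.
    scores = [max(p) for p in old_probs]
    total = sum(scores)
    if total == 0:
        return [1.0 / len(scores)] * len(scores)
    return [s / total for s in scores]

def ikd_loss(old_feats, new_feats, weights):
    # Importance-weighted mean-squared error between old and new query features.
    loss = 0.0
    for f_old, f_new, w in zip(old_feats, new_feats, weights):
        mse = sum((a - b) ** 2 for a, b in zip(f_old, f_new)) / len(f_old)
        loss += w * mse
    return loss

# Query 0 strongly matches an old class; query 1 does not.
old_probs = [[0.9, 0.05], [0.1, 0.1]]
weights = importance_weights(old_probs)
old_feats = [[1.0, 0.0], [0.0, 1.0]]
drift_important = [[2.0, 0.0], [0.0, 1.0]]    # only query 0 changes
drift_unimportant = [[1.0, 0.0], [0.0, 2.0]]  # only query 1 changes
print(ikd_loss(old_feats, drift_important, weights))
print(ikd_loss(old_feats, drift_unimportant, weights))
```

Under these assumptions, the same amount of feature drift is penalized more heavily on the query that matters for old classes, while the less important query is left freer to adapt.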

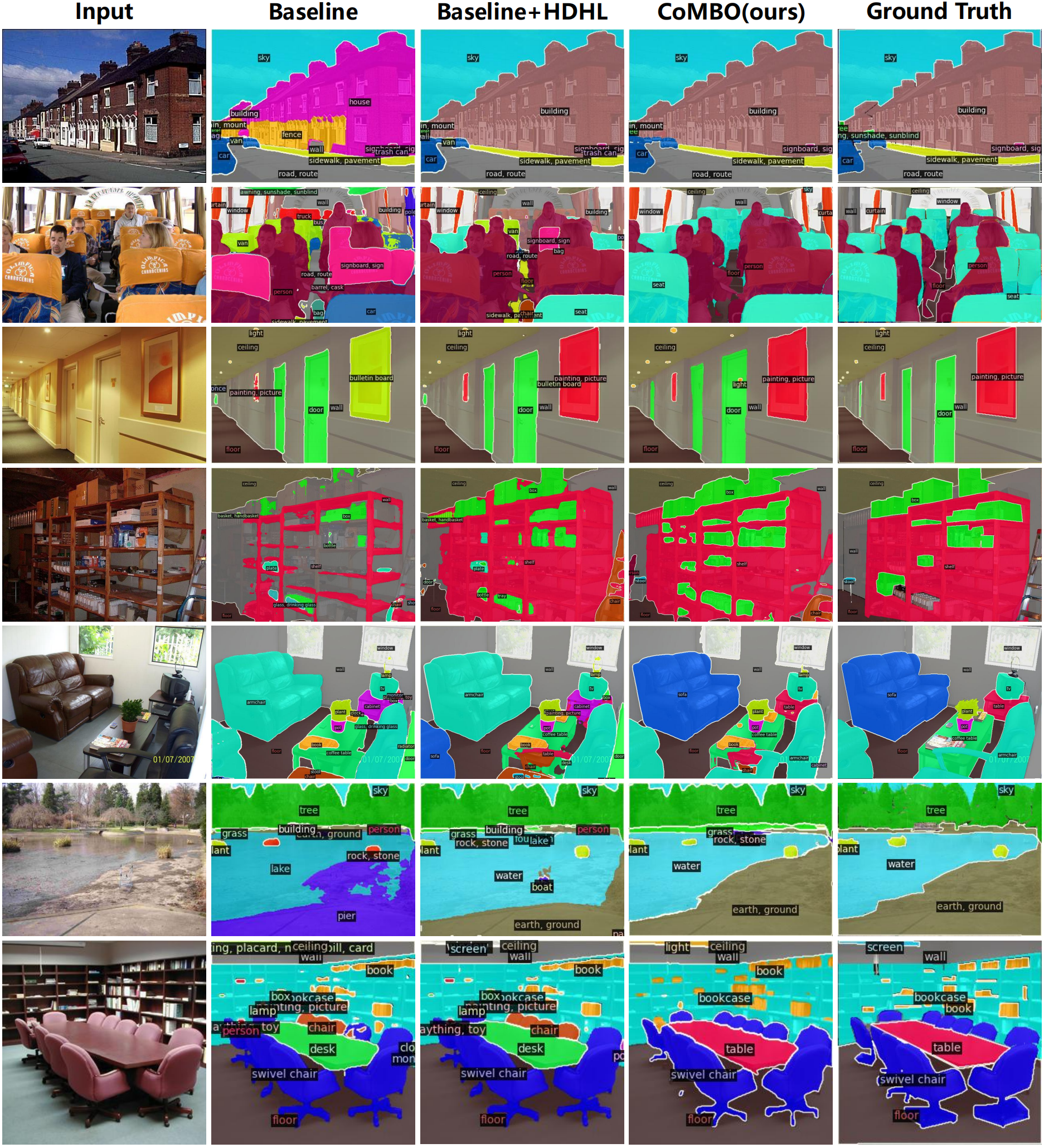

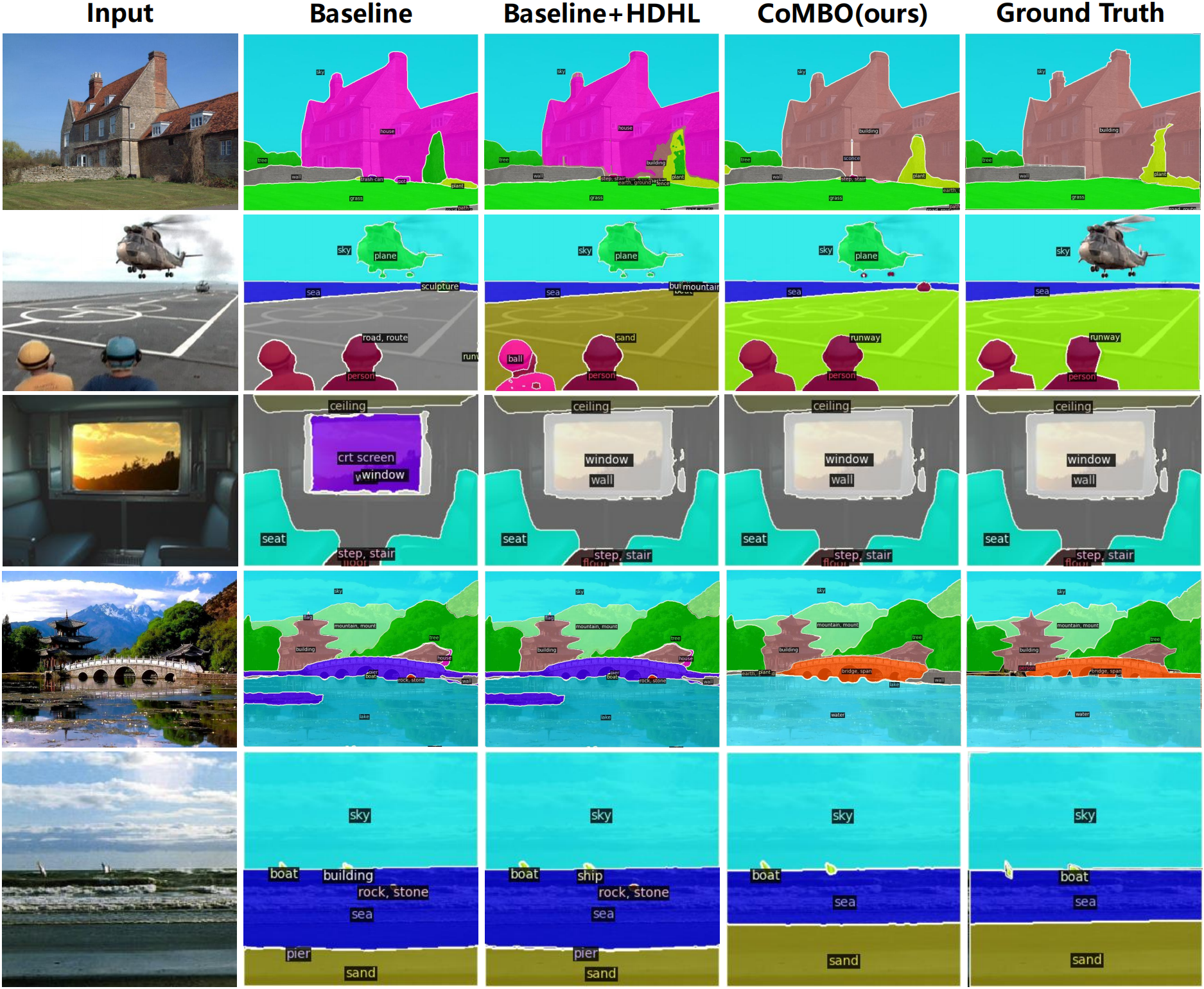

Through extensive quantitative and qualitative evaluations on the ADE20K dataset, we demonstrate the state-of-the-art performance of our model in both Class Incremental Semantic and Panoptic Segmentation tasks.

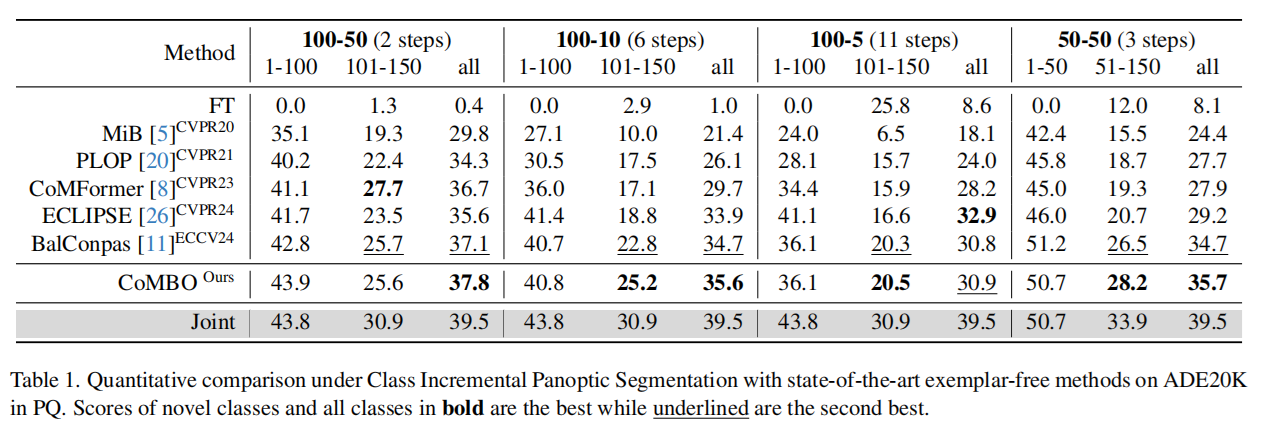

We evaluated our approach alongside current exemplar-free approaches on the ADE20K dataset within the framework of Class Incremental Panoptic Segmentation.

CoMBO surpasses other methods in most scenarios. In particular, in the challenging 100-10 sub-task, it achieves 35.6% PQ, with an advantage of at least 3.4% on incremental classes over previous state-of-the-art methods, demonstrating the effectiveness of reducing the conflicts.
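For readers unfamiliar with the PQ numbers quoted here, the following sketch shows the standard per-class Panoptic Quality computation: the sum of IoUs over matched segment pairs divided by TP + 0.5·FP + 0.5·FN. The function name and the toy inputs are illustrative and are not taken from the paper's evaluation code.

```python
def panoptic_quality(matched_ious, num_fp, num_fn):
    """PQ for one class.

    matched_ious: IoU of each matched (predicted, ground-truth) segment pair;
                  a pair counts as a match only when its IoU exceeds 0.5.
    num_fp:       predicted segments with no matching ground truth.
    num_fn:       ground-truth segments with no matching prediction.
    """
    tp = len(matched_ious)
    denom = tp + 0.5 * num_fp + 0.5 * num_fn
    if denom == 0:
        return 0.0
    return sum(matched_ious) / denom

# Toy example: two matched segments, one spurious prediction, one missed segment.
print(panoptic_quality([0.8, 0.6], num_fp=1, num_fn=1))
```

The reported PQ is typically the average of this quantity over classes, so a gain on incremental classes reflects both better segment matching and fewer missed or spurious segments for those classes.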

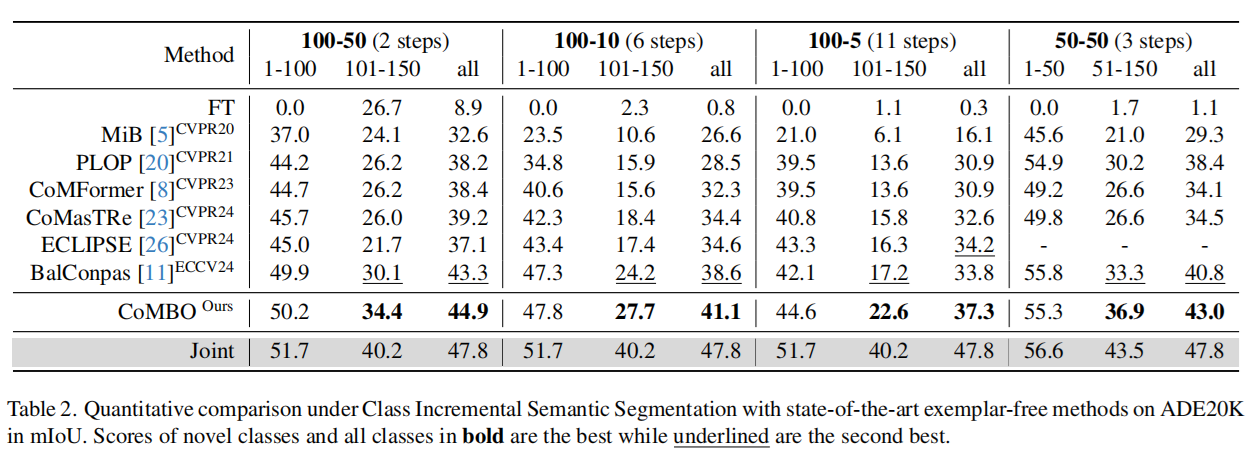

We further extended our evaluation to the semantic segmentation benchmark, comparing our approach against previous methods on the ADE20K dataset.

Our approach consistently outperforms previous methods in all tested scenarios.

This work was supported by the National Key R&D Program of China (Grant 2022YFC3310200), the National Natural Science Foundation of China (Grant 62472033, 92470203), the Beijing Natural Science Foundation (Grant L242022), the Royal Society grants (SIF\R1\231009, IES\R3\223050) and an Amazon Research Award.

@article{fang2025combo,
  title={CoMBO: Conflict Mitigation via Branched Optimization for Class Incremental Segmentation},
  author={Fang, Kai and Zhang, Anqi and Gao, Guangyu and Jiao, Jianbo and Liu, Chi Harold and Wei, Yunchao},
  journal={arXiv preprint arXiv:2504.04156},
  year={2025}
}